Using Artificial Intelligence tools in Claris FileMaker 2024

Large Language Models & Artificial Intelligence

Large Language Models (LLMs) are a type of artificial intelligence (AI) designed to understand and generate human-like language using deep learning techniques. They are built on transformer neural networks, often with billions of parameters, trained on vast amounts of text data so that they can perform tasks such as text generation, translation and summarisation. Some prominent examples of LLMs include OpenAI's GPT-4, Anthropic's Claude, Google's Gemini and DeepSeek.

These models have revolutionised natural language processing (NLP) by enabling machines to perform human-like communication tasks with remarkable accuracy.

How to use LLMs with FileMaker 2024

All of the LLMs mentioned above offer access via standard JSON/REST APIs, and Claris FileMaker first introduced support for integrating with REST APIs, through cURL options in the Insert from URL script step, all the way back in FileMaker 16 (released in 2017). FileMaker 2024 massively reduces the complexity of leveraging LLMs by adding a set of native AI-specific script steps, including Configure AI Account, Insert Embedding and Perform Semantic Find.
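To show what the native steps save you from, here is a minimal Python sketch of the raw JSON/REST request behind an embedding call. It assumes OpenAI as the provider, its public `/v1/embeddings` endpoint and the `text-embedding-3-small` model (none of these are prescribed by this article). It builds the request but does not send it, and the key is a placeholder.

```python
import json
import urllib.request

# Assumption: OpenAI is the chosen provider; other providers use different URLs and payloads.
OPENAI_EMBEDDINGS_URL = "https://api.openai.com/v1/embeddings"


def build_embedding_request(api_key: str, text: str,
                            model: str = "text-embedding-3-small") -> urllib.request.Request:
    """Build (but do not send) a POST request asking for an embedding of `text`."""
    payload = json.dumps({"model": model, "input": text}).encode("utf-8")
    return urllib.request.Request(
        OPENAI_EMBEDDINGS_URL,
        data=payload,
        headers={
            "Authorization": f"Bearer {api_key}",  # the API key from your provider account
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_embedding_request("sk-your-key-here", "Experienced B2B Marketing Professional")
    print(req.get_method(), req.full_url)
    # Sending it would be urllib.request.urlopen(req); the JSON response holds
    # the vector under data[0]["embedding"].
```

Before FileMaker 2024 you would have assembled the equivalent request yourself with Insert from URL and cURL options such as `-X POST`, `-H` and `-d`, then parsed the JSON response; Insert Embedding now handles this for you.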

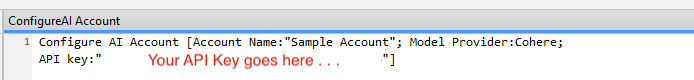

- Configure AI Account - allows you to specify an arbitrary internal name for a specific LLM account (e.g. 'DataTherapy Account with OpenAI'), along with the model provider (OpenAI, Cohere and custom local servers are currently supported as of 17th Feb 2025) and the API key you have generated to access the LLM service.

- Insert Embedding - you specify the AI account to use (set up with Configure AI Account), the text to send to the LLM, the specific model to use and the target into which the LLM's vectorised representation of that text is inserted (normally a FileMaker container field).

- Perform Semantic Find - carries out a find within the current found set of records, using the specified AI Account, either by natural language query or comparison to a vectorised text file.
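Both Insert Embedding and Perform Semantic Find deal in vectors: lists of numbers that place a piece of text in a "meaning space", so that texts with similar meanings end up close together. A common way to measure how close two such vectors are is cosine similarity. The Python sketch below shows the calculation on toy three-dimensional vectors (real embeddings have hundreds or thousands of dimensions); it illustrates the idea only and is not FileMaker's internal implementation.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 = same direction, 0.0 = unrelated."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same number of dimensions")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector carries no meaning to compare
    return dot / (norm_a * norm_b)


if __name__ == "__main__":
    query = [1.0, 0.0, 1.0]        # toy vector for the search text
    close_match = [0.9, 0.1, 0.8]  # toy vector for a similar biography
    unrelated = [0.0, 1.0, 0.0]    # toy vector for an unrelated biography
    print(round(cosine_similarity(query, close_match), 3))
    print(cosine_similarity(query, unrelated))  # 0.0: the vectors share no direction
```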

Example use case for AI in FileMaker: CV Semantic Search for Recruitment

We have a number of clients using the FileMaker platform for Recruitment (as Consultants or in-house HR Teams). As you might expect, they have extensive databases with thousands (or tens of thousands) of Candidate profiles, usually with a summary of each Candidate's CV stored in a text field. Commonly, the first stage in generating a shortlist for a new role is to whittle down potential candidates based on their availability, the seniority of the role and the salary band. However, this can still leave hundreds of suitable applicants, and the Recruiter must either review them individually or run keyword searches on their CV summaries in the hope of getting down to a manageable list of 5-10 prospective candidates to contact.

Classic keyword searches can be exceptionally hit and miss. If you are looking at CVs for a Marketing position, then just about all candidates will mention 'Marketing' in their CV, so that isn't a useful way to refine the search. Likewise, too specific a search term might incorrectly rule out candidates who are otherwise qualified: for example, if you searched for an exact match on 'B2B Marketing', you would miss candidates who have 'B2B and Consumer Marketing' or 'Marketing (B2B)' in their CV summary.
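To make both failure modes concrete, here is a minimal Python sketch that treats keyword search as a case-insensitive substring match and runs it against four invented CV summaries. The broad term matches everyone, while the exact phrase misses two candidates who are genuinely B2B marketers.

```python
def keyword_match(summary: str, phrase: str) -> bool:
    """Classic keyword search: case-insensitive exact substring match."""
    return phrase.lower() in summary.lower()


# Hypothetical CV summaries, invented for illustration.
summaries = {
    "Candidate A": "Senior B2B Marketing lead for SaaS products",
    "Candidate B": "Ten years in B2B and Consumer Marketing",
    "Candidate C": "Head of Marketing (B2B), cloud services",
    "Candidate D": "Retail Marketing assistant",
}

broad = [name for name, text in summaries.items() if keyword_match(text, "Marketing")]
narrow = [name for name, text in summaries.items() if keyword_match(text, "B2B Marketing")]

print(broad)   # all four candidates match, so the search narrows nothing
print(narrow)  # only Candidate A, although B and C also have B2B marketing experience
```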

Clearly, what we need here is a search technology that focuses on understanding the meaning and intent behind the Consultant's search query rather than bluntly matching keywords, and this is exactly what semantic search does. If they ran a semantic search for an 'Experienced B2B Marketing Professional with background in IT', we would want it to pick up candidates whose CVs use phrases as varied as 'Experienced B2B Marketeer', 'B2B Veteran', 'Cloud Services Marketing (B2B)', etc.

How do you achieve this in FileMaker? First you need to register with an LLM provider and obtain an API key (Cohere offers a free trial account). Then you just need to set up 3 scripts:

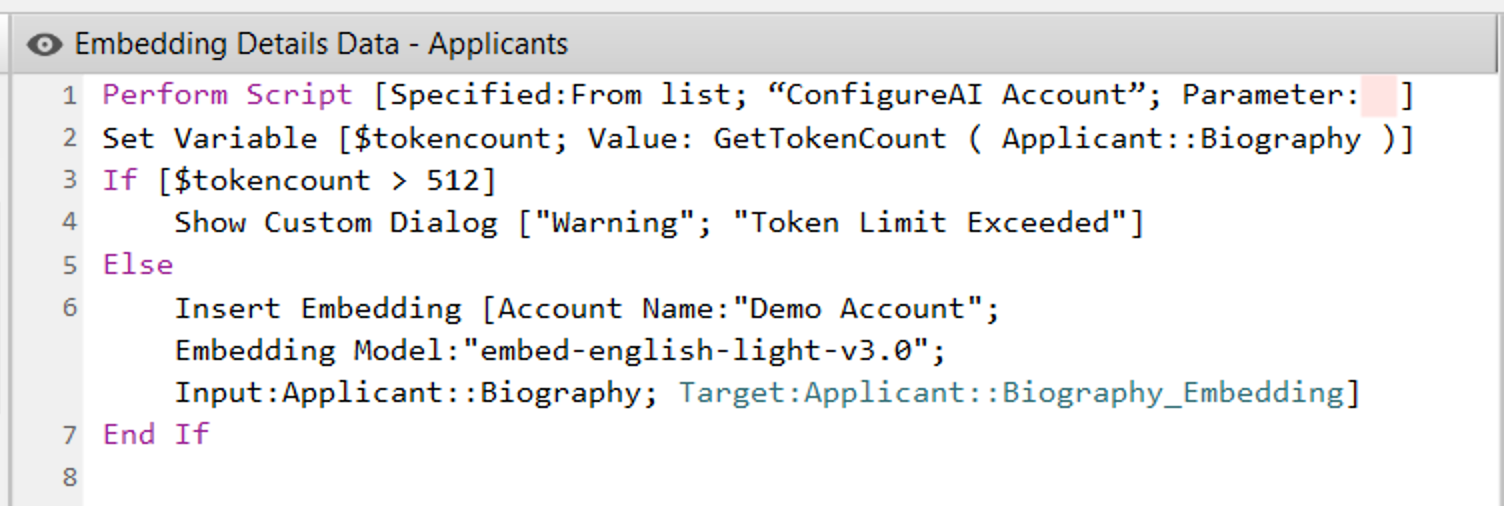

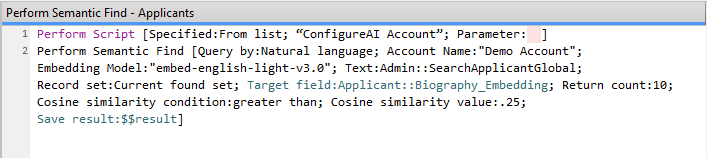

The first Script, 'Configure AI Account', simply contains the authentication information so that it can be run as a subscript each time the LLM account needs to be used. The second, 'Embedding Details Data - Applicants', takes the text from the 'Biography' (Text) field and pushes the vectorised representation generated by the LLM into the 'Biography_Embedding' (Container) field. In practice you would probably want this to run, via a Script Trigger, every time the 'Biography' field is updated. Finally, the last Script, 'Perform Semantic Find - Applicants', takes the text from the 'SearchApplicantGlobal' (Global text) field and uses the LLM to generate a vectorised representation, which is then compared with the contents of the 'Biography_Embedding' field for the current found set of records. The find is limited to returning at most 10 matches, and only those with a similarity score greater than 25%.
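The ranking logic of the final Script can be sketched in Python as follows. This is an illustration under stated assumptions, not how FileMaker implements Perform Semantic Find: `records` (a hypothetical name) maps record ids to stored embedding vectors, standing in for the 'Biography_Embedding' field across the found set; the vectors are toy values; and similarity is measured with cosine similarity. The 0.25 threshold and limit of 10 mirror the settings described above.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """1.0 = same direction, 0.0 = unrelated; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norms if norms else 0.0


def semantic_find(query_vec: list[float], records: dict[str, list[float]],
                  min_similarity: float = 0.25, limit: int = 10) -> list[tuple[str, float]]:
    """Return up to `limit` (record_id, score) pairs scoring above `min_similarity`, best first."""
    scored = [(rid, cosine_similarity(query_vec, vec)) for rid, vec in records.items()]
    matches = [(rid, score) for rid, score in scored if score > min_similarity]
    matches.sort(key=lambda pair: pair[1], reverse=True)  # most similar first
    return matches[:limit]  # truncate only after sorting


if __name__ == "__main__":
    found_set = {"A": [0.9, 0.1, 0.8], "B": [0.0, 1.0, 0.0], "C": [0.5, 0.5, 0.5]}
    query = [1.0, 0.0, 1.0]  # toy vector for the search text
    for rid, score in semantic_find(query, found_set):
        print(rid, round(score, 3))
```

Sorting before truncating matters: taking the first 10 qualifying records and then sorting would return an arbitrary 10 rather than the 10 closest matches.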

You now have a system that allows you to carry out semantic searches on applicants' biographies rather than just keyword searches!

Technical considerations

Despite how magical semantic search can seem when it is correctly implemented, it does have some technical constraints which need to be considered in real-world applications:

- LLMs have different pricing models and subscription fees, but in most cases there will be an effective cost per token. While this may be trivial in our example, with only a handful of CVs/biographies to embed, the costs would quickly escalate if you had millions of records. Equally, you need to update the embedding whenever a CV/biography is updated, which is an additional ongoing cost.

- AI providers offer multiple types of model optimised for different purposes. In the Cohere example above, the 'embed-english-light-v3' model is only suitable for English-language text and only supports up to 512 tokens per embedding request. The GetTokenCount() function can be used to estimate the number of tokens in a given piece of text before sending it to the LLM service.
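Both constraints come down to counting tokens, so a rough budgeting check is easy to sketch. The Python below assumes about 4 characters per token for English text (a common rule of thumb; real tokenisers vary, and the FileMaker token-count function mentioned above gives a better estimate), uses the 512-token limit quoted above, and takes the price per million tokens as a parameter because pricing differs between providers. The $0.10 figure in the usage lines is hypothetical.

```python
import math

# Assumptions for illustration only, not taken from any provider's documentation:
CHARS_PER_TOKEN = 4           # rough rule of thumb for English text
MAX_TOKENS_PER_REQUEST = 512  # limit quoted for embed-english-light-v3


def estimate_tokens(text: str) -> int:
    """Rough token estimate from character count (real tokenisers differ)."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def fits_in_one_request(text: str) -> bool:
    """True if the estimated token count is within the per-request limit."""
    return estimate_tokens(text) <= MAX_TOKENS_PER_REQUEST


def embedding_cost(records: int, avg_tokens: int, price_per_million_tokens: float) -> float:
    """Cost of one full embedding pass; every later re-embed after an edit adds to this."""
    return records * avg_tokens * price_per_million_tokens / 1_000_000


if __name__ == "__main__":
    bio = "Experienced B2B Marketeer with a background in cloud services. " * 10
    print(estimate_tokens(bio), fits_in_one_request(bio))
    # Hypothetical: 1,000,000 CVs of ~400 tokens each at $0.10 per million tokens
    print(embedding_cost(1_000_000, 400, 0.10))
```

The cost grows linearly with the number of records, their average length and how often they are re-embedded, which is why the re-embedding trigger described earlier deserves some thought at scale.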

If you don't know where to start with adding AI to your Claris FileMaker app, why not contact our Team for a free initial consultation? We're here to help you achieve your business goals.