by Ben Fletcher

15 January 2026

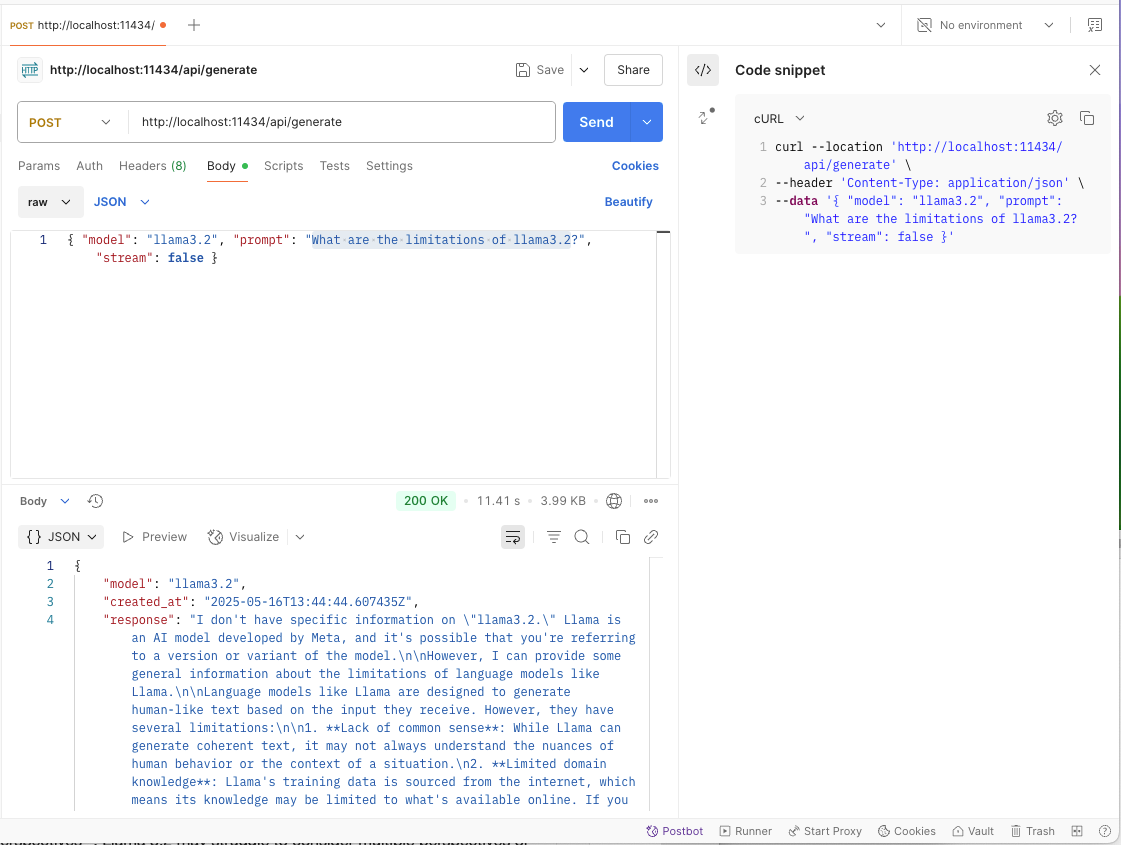

The work of planning, designing, developing and supporting custom solutions using the Claris FileMaker platform is a core part of DataTherapy's service, providing interesting, challenging and rewarding work for our team since inception. To celebrate our 31st year of business, we asked our most veteran consultant developer, Jonathan Taberner, and our most recent addition, Salman Javaherai, to contrast their experiences and give our customers an insight into what a working day looks like for a professional Claris FileMaker developer in 2026.

Background & Daily Workflow

What does a typical day look like for you as a FileMaker developer at DataTherapy?

SJ: A typical day starts with checking emails and reviewing the progress of any overnight tasks or scheduled scripts, just to make sure everything ran as expected. From there, I usually move between project planning, actual development, and the occasional quote or scoping session for a client request. There is always a satisfying mix of planning, collaboration, problem-solving and building.

JT: I usually have some project work on the go but need to balance this with routine tasks such as monitoring server backups and exports, and also be available for any requests which come in from our customers with support contracts. If the issue is urgent we look at it straight away – if not, we reply and schedule it. If I have the choice I prefer to do the most challenging work in the morning when I am sharpest, but it doesn’t always work out that way! We all mostly work remotely – I am either in my home office or a shared office space in town.

How do you balance client meetings, design, coding, and testing?

SJ: For me, it’s all about managing my focus levels. Design and testing require the deepest concentration, so I treat those like “deep work” sessions in the Cal Newport sense – time where I try to minimise interruptions and really think through the architecture. Pen and paper, screen off, is really handy here.
Coding and client meetings, on the other hand, have more natural breaks and tend to be a bit more caffeinated. When I’m stuck on a tricky problem, I’ll take a short break to let ideas percolate; the elegant solution usually arrives once your brain gets some space.

JT: Working as part of a team, it is good to spread this out a bit. We usually have several developers on an initial client call and are able to discuss, proofread proposals and sense-check plans, etc. Likewise with testing, we can ask another member of the team to test a solution as an end user – even better if they have not been involved in the development process!

What do you enjoy most about building solutions on the Claris FileMaker platform?

SJ: Claris FileMaker lets you build almost like a painter paints – you can design the interface, model the data, and shape the logic all in the same space, without constantly jumping between separate tools or layers. In FileMaker, it all happens immediately, right in front of you, which makes the process feel fluid, creative, and incredibly satisfying for both the developer and the client watching it come to life.

JT: I am still amazed and excited by how quickly we can build solutions using FileMaker. I enjoy the challenge of trying to produce an elegant solution – efficient coding with a view to speed and robustness of the solution. Best of all is delivering a solution which exceeds the client’s expectations so they are happy and looking forward to the next enhancements.

Expertise & Platform Evolution

Which version of FileMaker did you first start working with, and what is the biggest change or feature that excites you about the most recent FileMaker 2024 & FileMaker 2025 releases?

SJ: I started developing back in FileMaker 6, just before the first major change of multi-table files was introduced in version 7.
In the latest releases, the features that stand out most to me are the improved JSON parsing and the enhanced WebDirect features, especially things like being able to properly disable the back button.

JT: The first version of FileMaker I had was version 3. I became a professional developer on version 7 in 2005. It has to be the new AI features in FileMaker 2025 – we are already putting these to good use and this is just the beginning!

Which recent FileMaker feature has had the biggest impact on the way you build solutions (e.g., JSON, transactions, script enhancements, Layout UI improvements)?

SJ: Transactions have had a huge impact. They let us handle bulk record changes in a safe, controlled way without writing so much defensive code. The Execute FileMaker Data API step is another great addition: it simplifies minor record operations and streamlines scripts. And JavaScript in web viewers has opened the door to more standardised UI components and advanced reporting. JSON took me a little longer to get used to. For a while I just implemented it for very specific cases such as bulk record transfers, but now I probably use it in every script for more minor things.

JT: JSON – for interaction with third-party APIs, and as a great way to pass data within a solution (e.g. script parameters).

How do you ensure solutions remain scalable, secure, and future-proof?

SJ: I try to stick to fundamentals: use as many of the ‘traditional’ FileMaker features as possible, keep the architecture clean, and avoid unnecessary complexity. I’m also cautious about unstored calculations, which are useful in the right contexts but expensive if overused. I’m also conscious of the risks of “premature abstraction”. You don’t need to eliminate every repetition in a script or calculation if doing so makes the logic harder to read, maintain, or adapt later.
FileMaker solutions are inherently fluid: clients often request rapid changes, and we need to deliver quickly, so chasing the “perfectly abstracted” or “textbook-clean” version can actually slow things down. I’ve been caught out by adopting new FileMaker features the moment they appear. New tools are great, but it takes experience to judge when they’ll genuinely save time versus when they add unnecessary complexity.

JT: We periodically review solutions which we support for possible enhancements using new features. We design solutions to be scalable to large datasets, but some issues still only become evident after the solution is well in use. We are flexible in our coding and pool ideas as a team to achieve greater database efficiency.

Claris Studio & Connect

Have you integrated Claris Studio into your projects yet? What’s been your impression, and where does it really shine?

SJ: Yes, I’ve tested out Claris Studio integration for “fire-and-forget” web forms where users need to submit data without having a FileMaker account. The more recent addition of direct access to FileMaker tables, without having to use Claris Connect as an intermediary, makes configuration that much quicker. It’s great to have a truly anonymous and secure way into the FileMaker data.

JT: I am currently not significantly using Claris Studio. It has great potential but I still see it as a work in progress.

How do you see Claris Connect changing the way clients approach integrations?

SJ: Claris Connect lowers the technical barrier for clients who want to link their systems with external services like Mailchimp. Instead of managing APIs or custom scripts, Connect offers a more hands-off, approachable way to automate processes. It brings integrations within reach for organisations that previously would have seen them as too complex. For administrators it’s nice to have the option of having some automated processes outside FileMaker Server.

JT: It is very quick and easy to set up an integration using Claris Connect – we use this in combination with custom API solutions. The triggers in Claris Connect are particularly useful.

Can you share an example of how you’ve used these tools to streamline client workflows?

SJ: One example is an events management client who needed a simple way for staff to submit availability without a set of credentials. Using Claris Studio, we built a clean, login-free submission process that feeds directly back into their FileMaker system. It removed admin overhead and gave staff a frictionless way to provide data.

JT: We set up an integration for a jewellery studio between their FileMaker database and Shopify. It triggers when orders change status and uploads product details and pricing. This uses a combination of Claris Connect and custom API coding.